Running a Batch Job

Once created, Batch Jobs may be run from the Jobs list.

Select Jobs from the Navigation sidebar. The Jobs page is displayed.

Select the Batch Jobs tab.

Select the Environment where the Job definition is defined, from the Environment list box.

Find the Job that you want to run. Use the Filter search box to filter the Jobs if required.

Click on the Play icon in the end column alongside the Job that you want to run. The Run Job window is displayed.

Select the PDD that the Job will output data to from the Add to an existing Protected Data Domain list box.

Or, select Create new PDD to create a new PDD. Enter a name for the new PDD in the edit box along with all the other required details and click Save. (For more information, see Creating a Protected Data Domain.)

Click on the checkbox alongside each Schema table that you want to include in the Job.

Not all tables need be included. Only data corresponding to the selected tables will be processed.

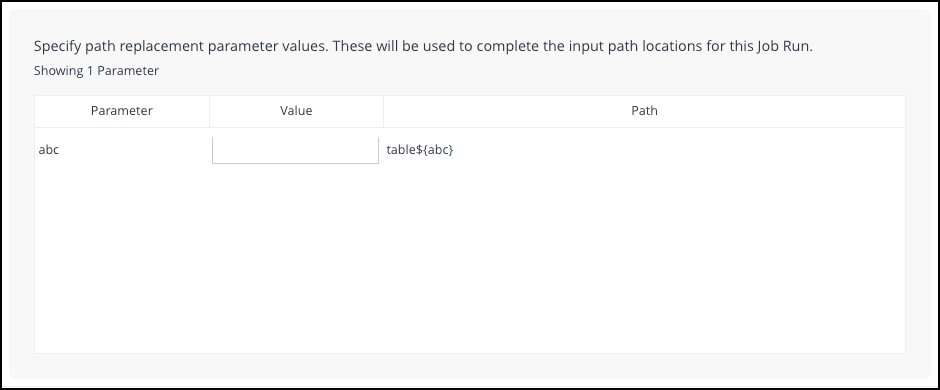

(Optional step) If there are any values that need to be specified for either Filters (for Hive Batch Jobs) or Path replacements (for HDFS Jobs), a window is displayed asking for the values to be specified. For example:

For more information, see:

For more information about setting up Filters, see Setting Filters for a Hive Batch Job

For more information about setting up Path replacements, see Partitioned Data and Wildcards (HDFS).

(Optional step) If the Privitar

application.propertiesfile has enabled the option to provide advanced diagnostic output for Jobs, an Advanced tab is displayed. Select this tab to configure the output.For more information about the options available from the Advanced tab, see Setting Diagnostic Options for Jobs (HDFS & Hive Batch Jobs).

Click on Run to start the Job.

Advanced Options

The settings described in this section are optional and do not need to be set in normal use.

Logging properties

Additional log4j Apache logging properties may be specified for the Job run.

Maximum Partition Size

Maximum Partition Size controls how much of an input file is processed in-memory at once by a Spark Executor. This setting can be used to control memory usage in the active Spark Executors. This setting is optional and should only be set if needed.

As an approximation, this value should be set according to the algorithm:

Maximum Partition Size < Executor Memory / (2 × Executor Cores)

AWS Glue Parameters

This option is only provided for Privitar AWS deployments.

The Executors option refers to the number of Spark executors that AWS Glue Batch Jobs will request to run a Batch job.

Note

Increasing this number will increase the cost of running this job in AWS. Contact your system administrator if you are in any doubt.