Configuring users

To run Data Flow jobs in the Privacy Platform, you need to create API users with the correct permissions to run both Masking and UnMasking jobs in the Team in which the Data Flow job is defined. This section describes how to create and configure API users on the platform to run Data Flow jobs. It applies to Data Flow jobs set up on any of the following data processing platforms:

Kafka/Confluent

Apache NiFi

StreamSets

For more general information about managing Users, Roles and Teams in the platform, refer to the Privitar Data Privacy Platform User Guide.

Creating an API user to run Data Flow jobs

To create API users in the platform for running Data Flow jobs:

Select API Users from the Superuser navigation panel.

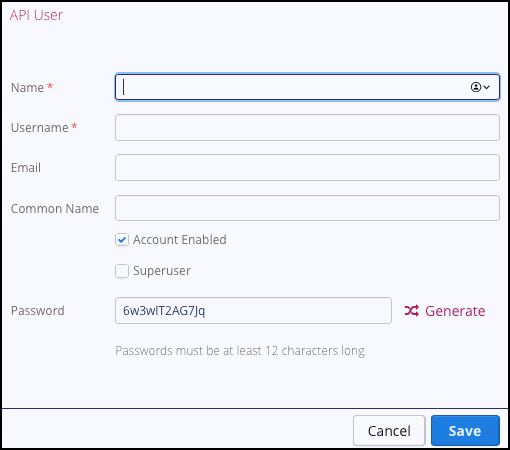

Select Create New API User. The API User dialog box is displayed:

Enter the details for the new API user. The first two fields - Name and Username - are mandatory. All other fields are optional:

Name is the display name of the API user.

Username is the unique username for the API user.

Email is the email address associated with the API user. This is an optional field.

Common Name or Password is the credential used for API authentication: Common Name if your platform installation is configured to use Mutual TLS, or Password if basic HTTP authentication is used.

You can click on Generate to generate a new password.

To make sure the User account is activated, select the Account Enabled check box.

Optionally, if you want this new API User to have Superuser permissions, select the Superuser check box.

Click Save to save the details entered and to create the API user. The new API user will be added to the list of API users shown in the main window.

Typically, you would create two API users: one to run Masking jobs and the other to run UnMasking jobs. A single API user can also run both types of job if required.
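The two authentication options described for the Common Name and Password fields can be sketched from the client side as follows. This is a minimal illustration using only the Python standard library, not the platform's own client code. The host name, the file paths and the helper names (`basic_auth_header`, `build_request`, `mutual_tls_context`) are assumptions made for the example. With Mutual TLS, the client certificate would presumably carry the Common Name entered for the API user.

```python
import base64
import ssl
import urllib.request

# Hypothetical host for illustration only; replace with your installation's address.
PLATFORM_URL = "https://privacy-platform.example.com/"


def basic_auth_header(username: str, password: str) -> str:
    """Build the value of an HTTP Basic Authorization header (RFC 7617)."""
    raw = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def build_request(url: str, username: str, password: str) -> urllib.request.Request:
    """Prepare (but do not send) a request that authenticates with Basic auth."""
    request = urllib.request.Request(url)
    request.add_header("Authorization", basic_auth_header(username, password))
    return request


def mutual_tls_context(cert_file: str, key_file: str, ca_file: str) -> ssl.SSLContext:
    """Create a client TLS context that presents a client certificate.

    cert_file and key_file are the API user's certificate and private key;
    ca_file is the CA bundle used to verify the server. All three paths are
    supplied by the caller and are not defined by the platform documentation.
    """
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return context


# Basic HTTP authentication: username and password of the API user.
request = build_request(PLATFORM_URL, "data_ops_api_user", "example-password")
```

A context returned by `mutual_tls_context` can be passed to `urllib.request.urlopen(request, context=...)` when the installation uses Mutual TLS instead of a password.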

Note

The platform also supports managing users externally in LDAP. If you manage users this way, assign an LDAP group to the relevant team role instead of assigning individual API users.

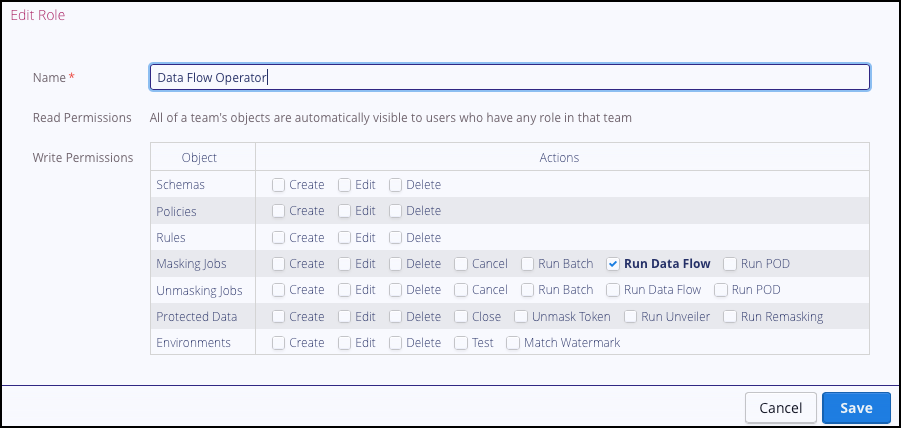

Masking Jobs

By default, the Data Flow Operator Role in the default Team in the platform has the Run Data Flow permission enabled for Masking Jobs. See:

The API user you created must be assigned the Data Flow Operator role in the Team in which the job is defined.

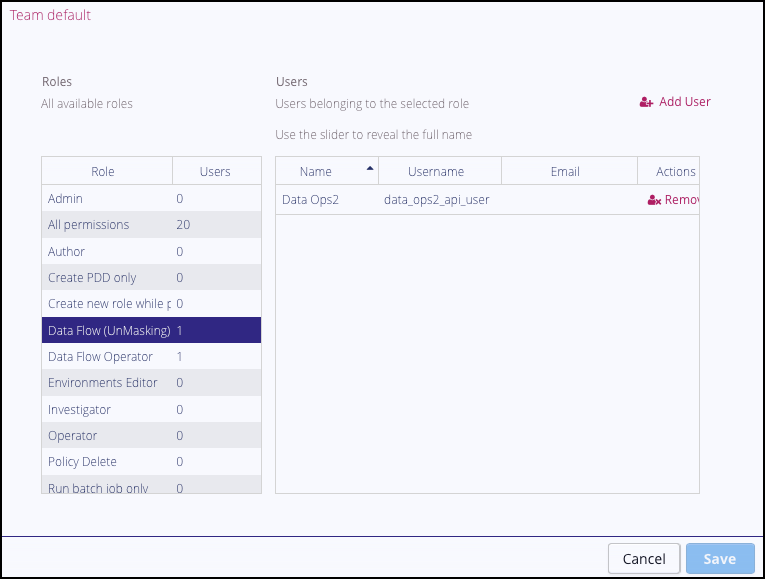

In the example below, an API user called data_ops_api_user has been created and assigned the role of Data Flow Operator:

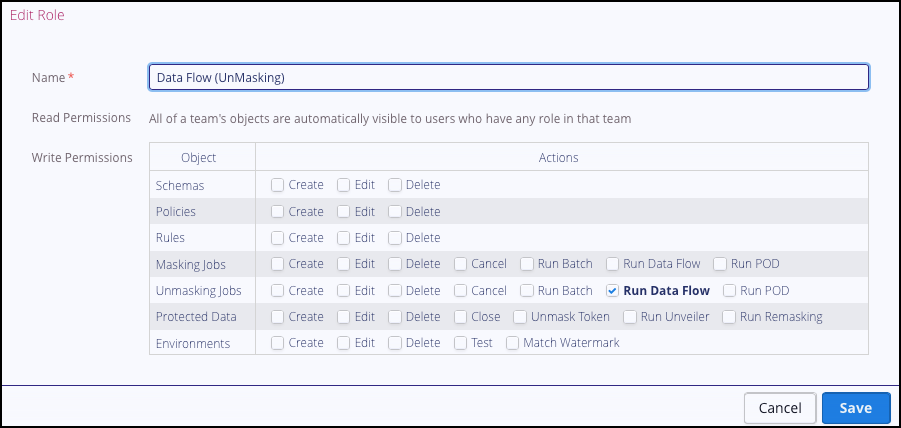

UnMasking Jobs

For UnMasking jobs, you need to assign an API user to a Role that has permission to Run Data Flow UnMasking jobs in the Team that the job is defined in.
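The relationship between API users, Roles, Teams and the Run Data Flow permission can be pictured with a small model. The sketch below is purely illustrative and is not how the platform stores or checks permissions: the `Role` and `Team` classes and the `can_run` method are hypothetical, and the Data Flow Operator role is assumed here to cover Masking jobs only. The role and user names are the ones used in this section's examples.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Role:
    """Hypothetical role: a name plus the job types Run Data Flow is enabled for."""
    name: str
    run_data_flow: frozenset  # e.g. frozenset({"masking"})


@dataclass
class Team:
    """Hypothetical team: maps each username to the roles assigned in this team."""
    name: str
    assignments: dict = field(default_factory=dict)

    def assign(self, username: str, role: Role) -> None:
        self.assignments.setdefault(username, []).append(role)

    def can_run(self, username: str, job_type: str) -> bool:
        # A user may run a job type if any of their roles in this team allows it.
        roles = self.assignments.get(username, [])
        return any(job_type in role.run_data_flow for role in roles)


# Assumption: the default Data Flow Operator role permits Masking jobs only.
operator = Role("Data Flow Operator", frozenset({"masking"}))
unmasking = Role("Data Flow (Unmasking)", frozenset({"unmasking"}))

team = Team("default")
team.assign("data_ops_api_user", operator)
team.assign("data_ops2_api_user", unmasking)
```

In this model a single API user could run both job types simply by being assigned both roles in the same team.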

In the example below, a new Role called Data Flow (Unmasking) has been created, with the Run Data Flow permission enabled for Unmasking jobs:

The new additional API user (data_ops2_api_user) can be assigned to the Data Flow (Unmasking) role: